Companies are only beginning to assimilate the benefits of Big Data Analytics and Artificial Intelligence, and with them the consequences for their customers' privacy. Whatever the benefits of using personal data and advanced analytics to learn more about customers and their preferences, it is crucial not to forget the problems that working with personal and sensitive data can cause.

In Data Analytics and Artificial Intelligence development, one of the most common problems is the anonymization of personal behavioral data, because such data can easily be re-identified through techniques such as inference, singling out (isolating) individuals, or linking records with public data. When that happens, the observations lose their anonymity. This is one of the main privacy risks, yet also one of the least known.
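To make the linkage risk concrete, here is a minimal Python sketch of a linkage attack. Everything in it is hypothetical (the two tables, the `zip`/`birth_year`/`sex` columns, and the `link_records` helper): a table whose names have been removed is joined with a public register on shared quasi-identifiers, and every record that matches exactly one person is re-identified.

```python
from collections import defaultdict

# Hypothetical "anonymized" medical records: names removed, quasi-identifiers kept.
medical = [
    {"zip": "28001", "birth_year": 1980, "sex": "F", "diagnosis": "asthma"},
    {"zip": "28001", "birth_year": 1975, "sex": "M", "diagnosis": "diabetes"},
    {"zip": "28002", "birth_year": 1990, "sex": "F", "diagnosis": "flu"},
]

# Hypothetical public register (e.g. a voter list) with names and the same attributes.
public = [
    {"name": "Ana", "zip": "28001", "birth_year": 1980, "sex": "F"},
    {"name": "Luis", "zip": "28001", "birth_year": 1975, "sex": "M"},
    {"name": "Eva", "zip": "28002", "birth_year": 1990, "sex": "F"},
    {"name": "Marta", "zip": "28002", "birth_year": 1990, "sex": "F"},
]

QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")


def link_records(anonymized, register, keys=QUASI_IDENTIFIERS):
    """Return {name: sensitive record} for every anonymized row that matches
    exactly one person in the public register on the quasi-identifiers."""
    index = defaultdict(list)
    for person in register:
        index[tuple(person[k] for k in keys)].append(person["name"])
    reidentified = {}
    for row in anonymized:
        candidates = index.get(tuple(row[k] for k in keys), [])
        if len(candidates) == 1:  # a unique match singles the person out
            reidentified[candidates[0]] = row
    return reidentified


if __name__ == "__main__":
    for name, row in link_records(medical, public).items():
        print(name, "->", row["diagnosis"])
```

Ana and Luis are re-identified because their attribute combinations are unique in the register, while the third record matches two people and stays ambiguous. Requiring that no combination be unique is the intuition behind k-anonymity.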

A data breach is one significant concern for data-driven companies in the era of big data, and it often signals that the anonymization methods and privacy policies an organization has in place are ineffective.

Data privacy is a big concern

Today, companies that own and work with customers' data also have many employees accessing large volumes of sensitive and private data. In many cases, these employees are not even part of the data science or data analytics team, which can lead to misuse or fraudulent use of the data. Despite current efforts by Information Technology (IT) and Data Governance departments, this kind of access remains especially vulnerable to such situations.

Over the last few months, we have seen more and more users and clients worrying about the privacy of their personal data. According to a Cisco study (Cisco Consumer Privacy Study, 2021) (1), 86% of respondents said they were worried about the security of their data, and 50% said they had changed, or were willing to change, their service provider because of its privacy policy.

Clients and users are not the only ones worried about privacy. Authorities and regulators are also aware of the importance of protecting personal data and of creating rules that ensure the privacy of users and companies.

General Data Protection Regulation

In 2018, the European Union (EU) began applying the General Data Protection Regulation (GDPR), which put into practice its newest reforms on data protection and privacy. The GDPR was the first step toward a common definition of citizens' privacy rights. Other jurisdictions, such as Brazil, Canada, and China, have created their own regulations, as have California with the CCPA and India with the PDPB.

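Most of the techniques currently used for anonymization are actually pseudonymization techniques, as defined by the GDPR (2). Article 4(5) states:

“‘pseudonymisation’ means the processing of personal data in such a manner that the personal data can no longer be attributed to a specific data subject without the use of additional information, provided that such additional information is kept separately and is subject to technical and organisational measures to ensure that the personal data are not attributed to an identified or identifiable natural person”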

This article essentially says that data obtained through a pseudonymisation process are not anonymous: since they can be re-attributed to a person using additional information, they must still be treated as personal data.
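One common way to pseudonymise identifiers is to replace them with a keyed hash. The sketch below is a minimal illustration, not a recommended scheme. It uses Python's standard `hmac` and `hashlib` modules, and the key, the sample records, and the `pseudonymize`/`reattribute` helpers are hypothetical. It shows why the output still counts as personal data: tokens are deterministic, so records stay linkable, and whoever holds the key can re-attribute a token by recomputing it for candidate identifiers.

```python
import hashlib
import hmac
from typing import Optional, Sequence

# Hypothetical secret key: in GDPR terms, the "additional information"
# that must be kept separately from the pseudonymised data.
SECRET_KEY = b"keep-this-key-separate"


def pseudonymize(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a deterministic keyed hash (HMAC-SHA256)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def reattribute(token: str, candidates: Sequence[str], key: bytes = SECRET_KEY) -> Optional[str]:
    """With the key, a list of candidate identifiers is enough to reverse a token."""
    for candidate in candidates:
        if pseudonymize(candidate, key) == token:
            return candidate
    return None


# Hypothetical purchase records keyed by e-mail address.
records = [
    ("alice@example.com", "purchase A"),
    ("bob@example.com", "purchase B"),
    ("alice@example.com", "purchase C"),
]
pseudonymized = [(pseudonymize(email), item) for email, item in records]

# Alice's two purchases share the same token, so they remain linkable.
print(pseudonymized[0][0] == pseudonymized[2][0])
```

Because the same identifier always maps to the same token, behavioral profiles can still be built on top of the pseudonymised table, which is exactly why the GDPR does not treat such data as anonymous.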

What about the classical anonymization techniques?

The classical anonymization techniques, such as permutation, randomization, and generalization, share a common problem: they can “destroy” the data, so that the aggressiveness of the methods causes its value and information to be lost. Moreover, they do not guarantee that the information is 100% anonymous and secure.

Permutation

Permutation consists of altering the order of the values in the database, typically by shuffling an attribute's values across records, so that the records no longer correspond exactly to the original information.

Although permutation may appear to be an effective processing technique, its biggest defect is that it can easily be reversed if the data is cross-referenced with additional information. Another defect is the loss of the statistical value of the information in terms of correlations and relationships between columns, which reduces predictive power.

Randomization

Randomization is another classical anonymization technique. It consists of modifying the variables in the data by applying predefined random patterns.

The best-known randomization technique is perturbation, also called noise addition, which consists of adding systematic noise to the dataset.
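As a minimal Python sketch of noise addition, assuming zero-mean Gaussian noise (one common choice; the article does not specify the noise distribution), the hypothetical `add_noise` helper below perturbs every value of a hypothetical age column. Individual values change, while aggregate statistics such as the mean stay close to the original.

```python
import random
import statistics


def add_noise(values, scale, seed=None):
    """Perturb each value with zero-mean Gaussian noise of standard deviation `scale`."""
    rng = random.Random(seed)
    return [v + rng.gauss(0.0, scale) for v in values]


# Hypothetical column of patient ages (repeated to get a larger sample).
ages = [34, 45, 29, 61, 52, 38, 47, 55, 41, 36] * 100
noisy_ages = add_noise(ages, scale=3.0, seed=7)

# Each record is altered, but the column mean is roughly preserved.
print(round(statistics.mean(ages), 1), round(statistics.mean(noisy_ages), 1))
```

The `scale` parameter is the privacy-utility knob: more noise hides individual values better but distorts the statistics more.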

For example, in a dataset containing the dates on which patients visited a hospital, this variable could be randomly adjusted by adding or subtracting the same number of days from the real date of each visit. In this case, unlike with permutation, some relationships between observations and variables are preserved. Nevertheless, this does not guarantee 100% privacy, because these patterns can easily be identified, which allows sensitive data to be re-identified.
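The date example can be sketched in Python as follows, assuming one random offset per patient (the article does not say whether the offset is per patient or global). The visit data and the `shift_dates` helper are hypothetical.

```python
import random
from datetime import date, timedelta

# Hypothetical hospital visits: (patient_id, visit_date).
visits = [
    ("p1", date(2021, 3, 1)), ("p1", date(2021, 3, 15)),
    ("p2", date(2021, 5, 10)), ("p2", date(2021, 6, 1)),
]


def shift_dates(records, max_days=30, seed=None):
    """Shift every date of a patient by the same random offset in [-max_days, max_days]."""
    rng = random.Random(seed)
    offsets = {}
    shifted = []
    for patient, visit in records:
        if patient not in offsets:
            offsets[patient] = timedelta(days=rng.randint(-max_days, max_days))
        shifted.append((patient, visit + offsets[patient]))
    return shifted


shifted_visits = shift_dates(visits, seed=1)
for (patient, original), (_, shifted) in zip(visits, shifted_visits):
    print(patient, original, "->", shifted)
```

The intervals between a patient's visits survive the shift, which is what keeps the data useful. The same property is the weakness: an attacker who knows the true date of one visit can compute the offset and undo it for every other record of that patient.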

Generalization

Generalization, the last of these techniques, replaces specific values with broader ones, diluting the features of the data. Its philosophy is to transform individual data into generic or aggregated data, so that it is no longer possible to identify a single record, only a group of records.
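A minimal Python sketch of generalization, using two hypothetical helpers (`generalize_age` and `generalize_zip`) and made-up records: exact ages become ten-year ranges and postcodes keep only their first digits. After this step the first two records can no longer be told apart.

```python
def generalize_age(age: int, width: int = 10) -> str:
    """Replace an exact age with the range that contains it, e.g. 37 -> '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def generalize_zip(zip_code: str, keep: int = 3) -> str:
    """Keep only the first `keep` characters of a postcode and mask the rest."""
    return zip_code[:keep] + "*" * (len(zip_code) - keep)


# Hypothetical records with exact ages and postcodes.
records = [
    {"age": 37, "zip": "28013"},
    {"age": 34, "zip": "28015"},
    {"age": 52, "zip": "08001"},
]
generalized = [
    {"age": generalize_age(r["age"]), "zip": generalize_zip(r["zip"])} for r in records
]
print(generalized)  # the first two records become identical
```

Wider ranges (`width`) and fewer kept digits (`keep`) make groups larger and individuals harder to isolate, at the cost of detail.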

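One of the most common generalization methods is k-anonymity, which requires every record to be indistinguishable from at least k - 1 other records with respect to its quasi-identifiers (attributes, such as age or postcode, that can identify a person when combined). When k-anonymity is applied, the parameter “k” has to be chosen, and it defines the balance between the privacy and the utility of the data.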
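Checking which k a table achieves is straightforward. In the Python sketch below, the table and the `k_anonymity` helper are hypothetical; the helper groups rows by their quasi-identifier values and returns the size of the smallest group.

```python
from collections import Counter


def k_anonymity(rows, quasi_identifiers):
    """Return the k for which the table is k-anonymous: the size of the smallest
    group of rows sharing the same quasi-identifier values (rows must be non-empty)."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())


# Hypothetical table that has already been generalized.
table = [
    {"age": "30-39", "zip": "280**", "diagnosis": "flu"},
    {"age": "30-39", "zip": "280**", "diagnosis": "asthma"},
    {"age": "30-39", "zip": "280**", "diagnosis": "flu"},
    {"age": "50-59", "zip": "080**", "diagnosis": "diabetes"},
    {"age": "50-59", "zip": "080**", "diagnosis": "flu"},
]
print(k_anonymity(table, ["age", "zip"]))  # 2
```

Reaching a higher k usually requires coarser generalization (wider age ranges, fewer postcode digits), which is where the privacy gain turns into a loss of utility.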

However, even with a high value of “k”, privacy problems persist whenever the sensitive information within a group converges into homogeneous information, for example when every record in a group shares the same diagnosis. In that case, the data is exposed to so-called “homogeneity attacks”.

Some authors propose other methods, such as l-diversity or t-closeness. However, even these variants are not enough to guarantee the privacy of the information.
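The homogeneity attack, and the simplest l-diversity check that detects it, can be sketched in Python. The table and all helper names are hypothetical, and the check shown is "distinct" l-diversity (the minimum number of different sensitive values per group); t-closeness is not illustrated. The table is 3-anonymous, yet anyone who knows that a person falls in the first group learns their diagnosis.

```python
from collections import defaultdict


def group_by_quasi_identifiers(rows, quasi_identifiers):
    """Map each combination of quasi-identifier values to the rows that share it."""
    groups = defaultdict(list)
    for row in rows:
        groups[tuple(row[q] for q in quasi_identifiers)].append(row)
    return groups


def distinct_l_diversity(rows, quasi_identifiers, sensitive):
    """Smallest number of distinct sensitive values found in any group."""
    groups = group_by_quasi_identifiers(rows, quasi_identifiers)
    return min(len({row[sensitive] for row in group}) for group in groups.values())


def homogeneity_attack(rows, quasi_identifiers, sensitive, target):
    """If everyone in the target's group shares one sensitive value, return it."""
    group = group_by_quasi_identifiers(rows, quasi_identifiers).get(target, [])
    values = {row[sensitive] for row in group}
    return values.pop() if len(values) == 1 else None


# Hypothetical 3-anonymous table: the first group is homogeneous.
table = [
    {"age": "30-39", "zip": "280**", "diagnosis": "cancer"},
    {"age": "30-39", "zip": "280**", "diagnosis": "cancer"},
    {"age": "30-39", "zip": "280**", "diagnosis": "cancer"},
    {"age": "50-59", "zip": "080**", "diagnosis": "flu"},
    {"age": "50-59", "zip": "080**", "diagnosis": "asthma"},
    {"age": "50-59", "zip": "080**", "diagnosis": "diabetes"},
]
qi = ["age", "zip"]
print(distinct_l_diversity(table, qi, "diagnosis"))  # 1: one group is homogeneous
print(homogeneity_attack(table, qi, "diagnosis", ("30-39", "280**")))  # cancer
```

Requiring l of at least 2 would reject this table. Even so, l-diversity can still leak information when the distinct values are semantically similar or very unevenly distributed, which is consistent with the claim that these variants alone do not guarantee privacy.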

Conclusions

Despite all these options for anonymizing or pseudonymizing data, attacks and data breaches happen with alarming frequency. As data becomes more open and interconnected, the risk of new privacy attacks will grow rapidly unless organizations take adequate action, with negative effects on their business and reputation.

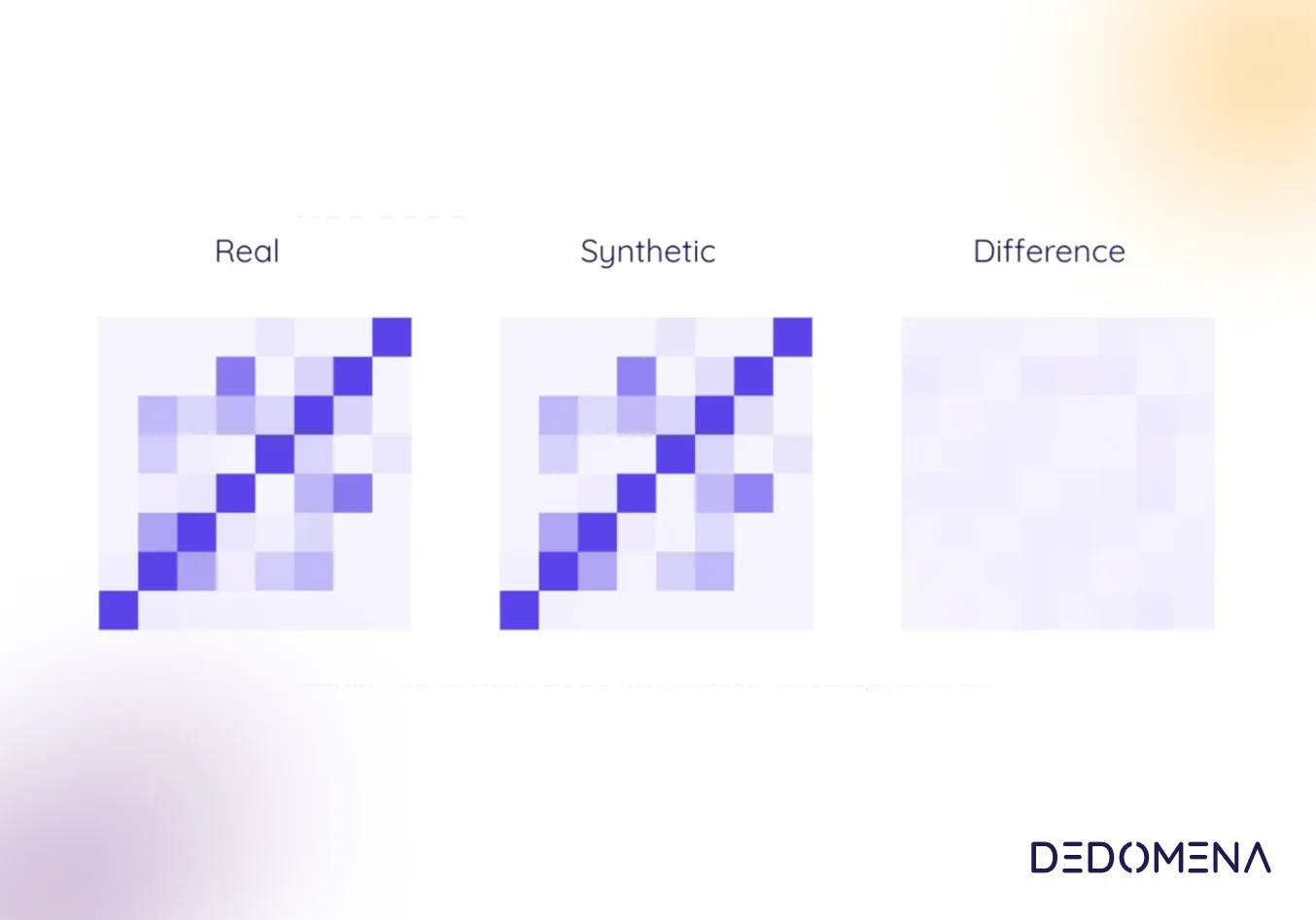

Whatever criteria are used to prevent the re-identification of personal and sensitive data, there will always be a trade-off between privacy and utility. With current techniques, data that is considered completely anonymized retains little statistical, informational, or predictive value, while data that keeps its value is not completely anonymous.

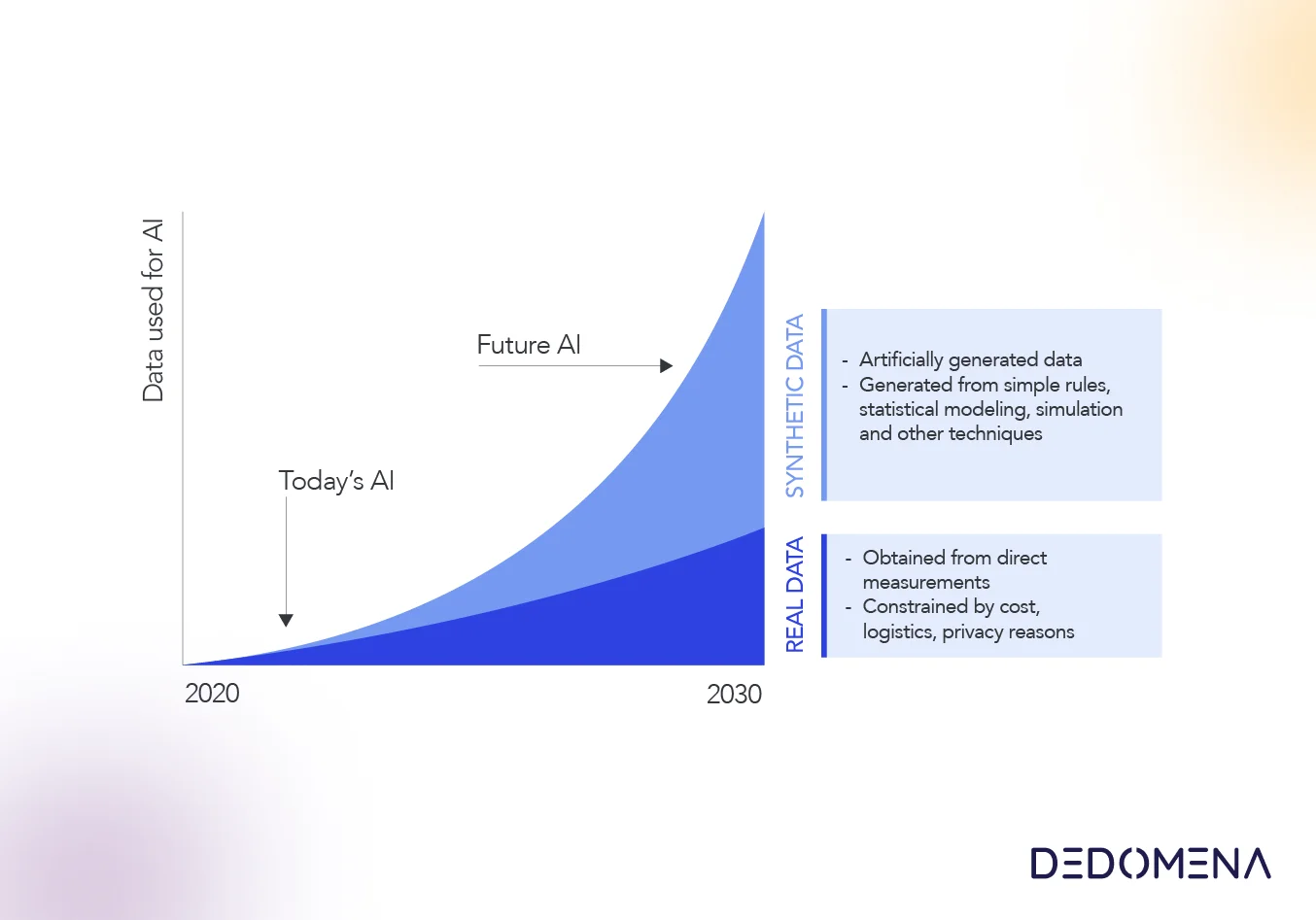

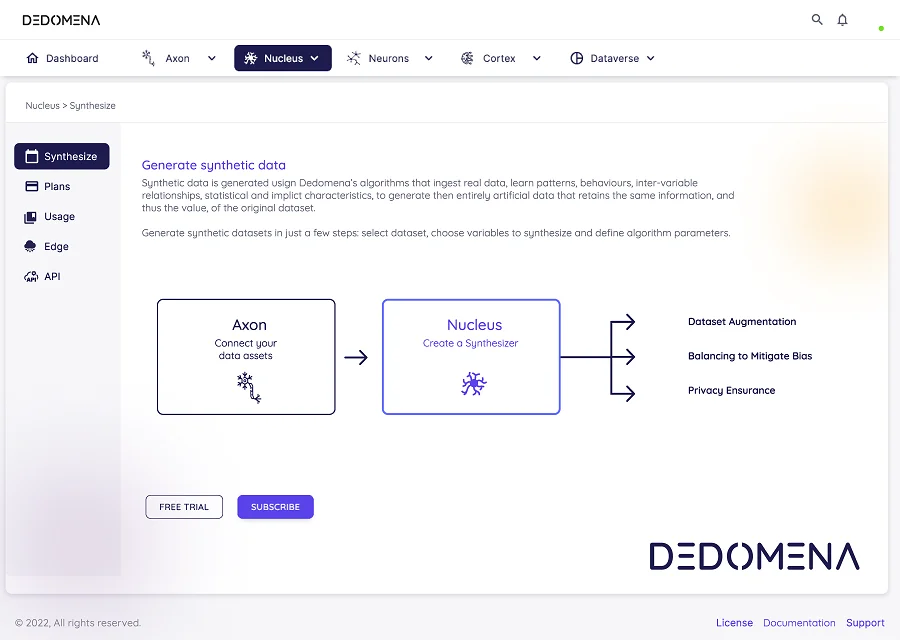

Data-centric companies face an important dilemma between privacy and utility, which often leads to internal disputes between those responsible for protecting the privacy of the information and those responsible for extracting value from it. However, the solution to this dilemma may already exist: what if companies could create their own data?

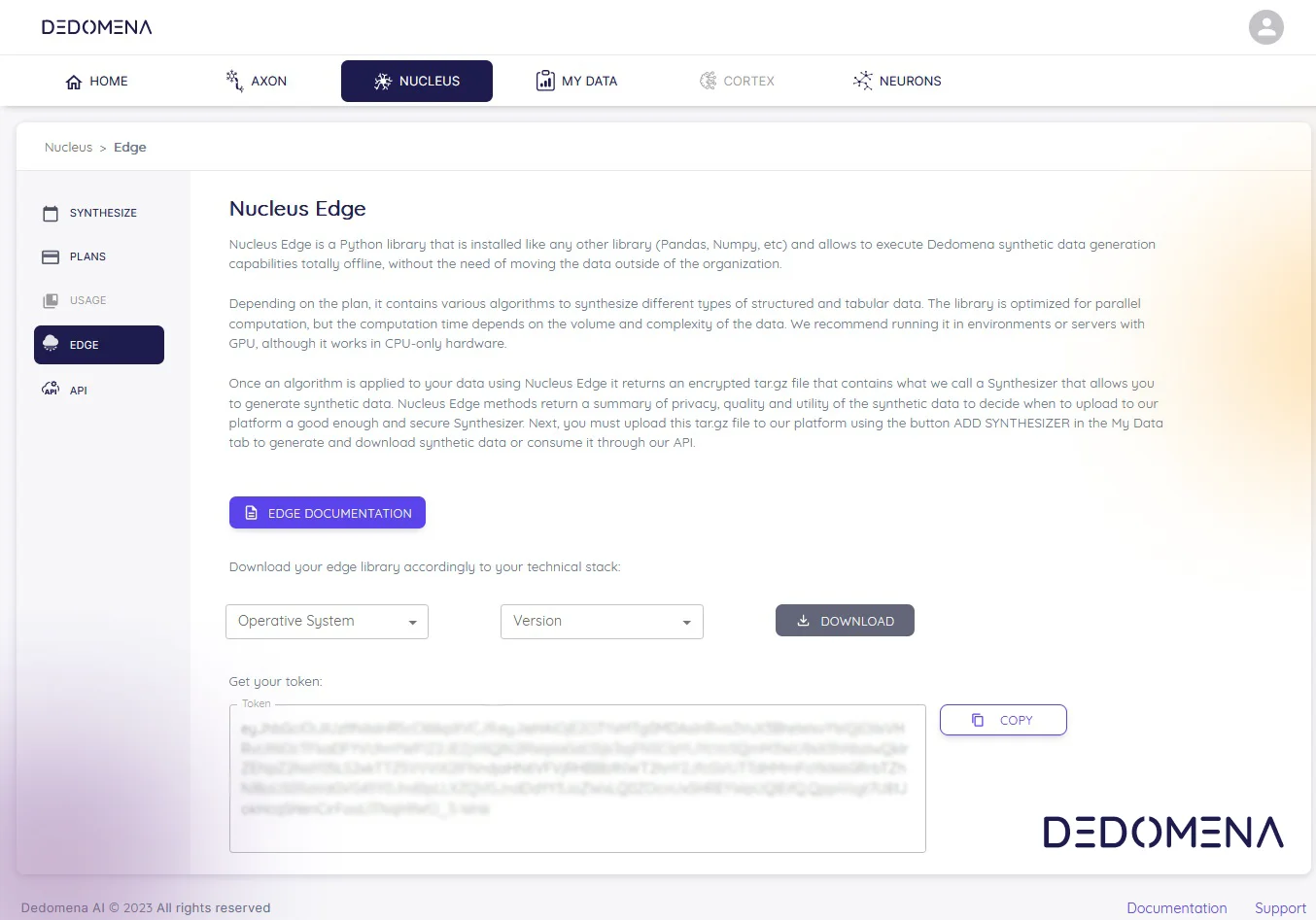

The solution is Synthetic Data.

If you want to know more about how synthetic data can boost your data initiatives, do not hesitate to contact us, or click here to discover the newest applications of the future of data science: synthetic data.

References

1. Cisco, “Building Consumer Confidence Through Transparency and Control,” Cisco Consumer Privacy Study, 2021.

2. European Union, General Data Protection Regulation (GDPR), Article 4, “Definitions.”